Hi fellow Makers,

How are you? This time we continue our series on VisionSkills. If you don't know what Windows VisionSkills are yet, please read the previous article here.

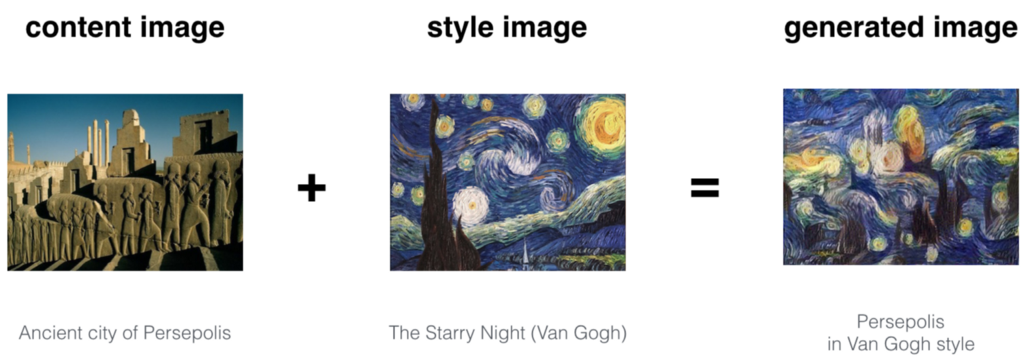

This time we will try building our own skill on top of a neural-style ML model, which uses convolutional neural networks to combine the content of one image with the patterns, or style, of another image. The project behind the model itself can be found here.

The custom skill we are going to build takes one input, an image from the user, and produces one output: that image processed with the selected style model. We use existing neural-style models that have already been converted to ONNX format (the models can be found here); we use the opset 8 versions. To run inference on models in this format, we use Windows Machine Learning (WinML).

Okay, let's start building it. Follow these steps:

Open Visual Studio 2017 or 2019, then create a new project > Class Library (Universal Windows). Name it “NeuralStyleTransformer”.

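Install the NuGet package “Microsoft.AI.Skills.SkillInterface” with “Include prerelease” checked. The code below uses the Microsoft.AI.Skills.SkillInterfacePreview namespace, so make sure the package version you install provides that namespace.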

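Create a folder named “Models”, then add the ONNX files from this repo to it. Set each file's Build Action to “Content” so it is packaged with the app, because the skill loads the models from ms-appx:///NeuralStyleTransformer/Models/.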

Next, create a new class named “NeuralStyleTransformerConst” and enter the following code:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Threading.Tasks;

namespace NeuralStyleTransformer
{
    public static class NeuralStyleTransformerConst
    {
        public const string WINML_MODEL_INPUTNAME = "input1";
        public const string WINML_MODEL_OUTPUTNAME = "output1";
        public const string SKILL_INPUTNAME_IMAGE = "InputImage";
        public const string SKILL_OUTPUTNAME_IMAGE = "OutputImage";
        public const int IMAGE_WIDTH = 224;
        public const int IMAGE_HEIGHT = 224;
    }

    public enum StyleChoices
    {
        Candy = 0, Mosaic, Pointilism, RainPrincess, Udnie
    }
}
```

This class holds the constants we need for model inference. Next, add another class named “NeuralStyleTransformerBinding” and enter the following code:

```csharp
using System.Collections;
using System.Collections.Generic;
using Microsoft.AI.Skills.SkillInterfacePreview;
using Windows.AI.MachineLearning;
using System.Linq;
using Windows.Foundation;
using Windows.Media;

#pragma warning disable CS1591 // Disable missing comment warning

namespace NeuralStyleTransformer
{
    /// <summary>
    /// NeuralStyleTransformerBinding class.
    /// It holds the input and output passed and retrieved from a NeuralStyleTransformerSkill instance.
    /// </summary>
    public sealed class NeuralStyleTransformerBinding : IReadOnlyDictionary<string, ISkillFeature>, ISkillBinding
    {
        // WinML related
        internal LearningModelBinding m_winmlBinding = null;
        private VisionSkillBindingHelper m_bindingHelper = null;

        /// <summary>
        /// NeuralStyleTransformerBinding constructor
        /// </summary>
        internal NeuralStyleTransformerBinding(
            ISkillDescriptor descriptor,
            ISkillExecutionDevice device,
            LearningModelSession session)
        {
            m_bindingHelper = new VisionSkillBindingHelper(descriptor, device);

            // Create WinML binding
            m_winmlBinding = new LearningModelBinding(session);
        }

        /// <summary>
        /// Sets the input image to be processed by the skill
        /// </summary>
        /// <param name="frame"></param>
        /// <returns></returns>
        public IAsyncAction SetInputImageAsync(VideoFrame frame)
        {
            return m_bindingHelper.SetInputImageAsync(frame);
        }

        /// <summary>
        /// Returns transformed image
        /// </summary>
        /// <returns></returns>
        public VideoFrame GetTransformedImage
        {
            get
            {
                ISkillFeature feature = null;
                if (m_bindingHelper.TryGetValue(NeuralStyleTransformerConst.SKILL_OUTPUTNAME_IMAGE, out feature))
                {
                    return (feature.FeatureValue as SkillFeatureImageValue).VideoFrame;
                }
                else
                {
                    return null;
                }
            }
        }

        // interface implementation via composition
        #region InterfaceImpl

        // ISkillBinding
        public ISkillExecutionDevice Device => m_bindingHelper.Device;

        // IReadOnlyDictionary
        public bool ContainsKey(string key)
        {
            return m_bindingHelper.ContainsKey(key);
        }

        public bool TryGetValue(string key, out ISkillFeature value)
        {
            return m_bindingHelper.TryGetValue(key, out value);
        }

        public ISkillFeature this[string key] => m_bindingHelper[key];

        public IEnumerable<string> Keys => m_bindingHelper.Keys;

        public IEnumerable<ISkillFeature> Values => m_bindingHelper.Values;

        public int Count => m_bindingHelper.Count;

        public IEnumerator<KeyValuePair<string, ISkillFeature>> GetEnumerator()
        {
            return m_bindingHelper.AsEnumerable().GetEnumerator();
        }

        IEnumerator IEnumerable.GetEnumerator()
        {
            return m_bindingHelper.AsEnumerable().GetEnumerator();
        }

        #endregion InterfaceImpl
        // end of implementation of interface via composition
    }
}
```

This class is used to feed in the input data (the image) and to retrieve the output of the model inference; this is commonly called a binding.
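To make the "interface implementation via composition" idea more concrete, here is a minimal, self-contained sketch that does not depend on the Skills packages. `DelegatingBinding`, `SetInput`, `SetOutput` and `Output` are hypothetical names made up for illustration, and a plain `Dictionary<string, object>` stands in for `VisionSkillBindingHelper`; the real binding stores `ISkillFeature` values instead of plain objects.

```csharp
using System.Collections;
using System.Collections.Generic;

// Hypothetical, simplified stand-in for NeuralStyleTransformerBinding:
// the public class implements IReadOnlyDictionary by forwarding every member
// to an inner object, the way the real binding forwards to VisionSkillBindingHelper.
public sealed class DelegatingBinding : IReadOnlyDictionary<string, object>
{
    // Inner store that does the real work (plays the role of m_bindingHelper).
    private readonly Dictionary<string, object> m_inner = new Dictionary<string, object>();

    // Typed helpers, similar in spirit to SetInputImageAsync / GetTransformedImage.
    public void SetInput(object frame) => m_inner["InputImage"] = frame;
    public object Output => m_inner.TryGetValue("OutputImage", out var value) ? value : null;

    // Called by the (hypothetical) skill after evaluation to publish its result.
    public void SetOutput(object frame) => m_inner["OutputImage"] = frame;

    // IReadOnlyDictionary implementation via composition: every call is forwarded.
    public object this[string key] => m_inner[key];
    public IEnumerable<string> Keys => m_inner.Keys;
    public IEnumerable<object> Values => m_inner.Values;
    public int Count => m_inner.Count;
    public bool ContainsKey(string key) => m_inner.ContainsKey(key);
    public bool TryGetValue(string key, out object value) => m_inner.TryGetValue(key, out value);
    public IEnumerator<KeyValuePair<string, object>> GetEnumerator() => m_inner.GetEnumerator();
    IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();
}
```

Composition keeps the public class small: the dictionary plumbing lives in the inner object, and the outer class only adds the members that are specific to the skill.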

Then create another class named “NeuralStyleTransformerSkill” and enter the following code:

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Runtime.InteropServices.WindowsRuntime;
using System.Threading.Tasks;
using Microsoft.AI.Skills.SkillInterfacePreview;
using Windows.AI.MachineLearning;
using Windows.Foundation;
using Windows.Foundation.Collections;
using Windows.Graphics.Imaging;
using Windows.Media;
using Windows.Storage;

namespace NeuralStyleTransformer
{
    /// <summary>
    /// NeuralStyleTransformerSkill class.
    /// Contains the main execution logic of the skill: gets the input image, then runs an ML model to transfer the style.
    /// Also acts as a factory for NeuralStyleTransformerBinding
    /// </summary>
    public sealed class NeuralStyleTransformerSkill : ISkill
    {
        // WinML related members
        private LearningModelSession m_winmlSession = null;

        // Frame that receives the model output. It belongs to this skill instance;
        // a static field would be shared by (and disposed under) every other instance.
        private VideoFrame m_outputFrame = null;

        /// <summary>
        /// Creates and initializes a NeuralStyleTransformerSkill instance
        /// </summary>
        /// <param name="descriptor"></param>
        /// <param name="device"></param>
        /// <param name="Mode">the style, which selects the ONNX model to load</param>
        /// <returns></returns>
        internal static IAsyncOperation<NeuralStyleTransformerSkill> CreateAsync(
            ISkillDescriptor descriptor,
            ISkillExecutionDevice device,
            StyleChoices Mode)
        {
            return AsyncInfo.Run(async (token) =>
            {
                // Create instance
                var skillInstance = new NeuralStyleTransformerSkill(descriptor, device);

                // Pick the ONNX model file that matches the selected style
                var modelName = "candy.onnx";
                switch (Mode)
                {
                    case StyleChoices.Candy:
                        modelName = "candy.onnx";
                        break;
                    case StyleChoices.Mosaic:
                        modelName = "mosaic.onnx";
                        break;
                    case StyleChoices.Pointilism:
                        modelName = "pointilism.onnx";
                        break;
                    case StyleChoices.RainPrincess:
                        modelName = "rain_princess.onnx";
                        break;
                    case StyleChoices.Udnie:
                        modelName = "udnie.onnx";
                        break;
                }

                // Load WinML model from the app package
                var modelFile = await StorageFile.GetFileFromApplicationUriAsync(new Uri($"ms-appx:///NeuralStyleTransformer/Models/{modelName}"));
                var winmlModel = LearningModel.LoadFromFilePath(modelFile.Path);

                // Create WinML session
                skillInstance.m_winmlSession = new LearningModelSession(winmlModel, GetWinMLDevice(device));

                // Create output frame
                skillInstance.m_outputFrame = new VideoFrame(
                    BitmapPixelFormat.Bgra8,
                    NeuralStyleTransformerConst.IMAGE_WIDTH,
                    NeuralStyleTransformerConst.IMAGE_HEIGHT);

                return skillInstance;
            });
        }

        /// <summary>
        /// NeuralStyleTransformerSkill constructor
        /// </summary>
        /// <param name="description"></param>
        /// <param name="device"></param>
        private NeuralStyleTransformerSkill(
            ISkillDescriptor description,
            ISkillExecutionDevice device)
        {
            SkillDescriptor = description;
            Device = device;
        }

        /// <summary>
        /// Factory method for instantiating NeuralStyleTransformerBinding
        /// </summary>
        /// <returns></returns>
        public IAsyncOperation<ISkillBinding> CreateSkillBindingAsync()
        {
            return AsyncInfo.Run((token) =>
            {
                var completedTask = new TaskCompletionSource<ISkillBinding>();
                completedTask.SetResult(new NeuralStyleTransformerBinding(SkillDescriptor, Device, m_winmlSession));
                return completedTask.Task;
            });
        }

        /// <summary>
        /// Evaluate input image, process (inference) then bind to output
        /// </summary>
        /// <param name="binding"></param>
        /// <returns></returns>
        public IAsyncAction EvaluateAsync(ISkillBinding binding)
        {
            NeuralStyleTransformerBinding bindingObj = binding as NeuralStyleTransformerBinding;
            if (bindingObj == null)
            {
                throw new ArgumentException("Invalid ISkillBinding parameter: This skill handles evaluation of NeuralStyleTransformerBinding instances only");
            }

            return AsyncInfo.Run(async (token) =>
            {
                // Retrieve input frame from the binding object
                VideoFrame inputFrame = (binding[NeuralStyleTransformerConst.SKILL_INPUTNAME_IMAGE].FeatureValue as SkillFeatureImageValue).VideoFrame;

                // Make sure the bound frame actually carries pixels (CPU- or GPU-backed)
                if (inputFrame.SoftwareBitmap == null && inputFrame.Direct3DSurface == null)
                {
                    throw new ArgumentException("An invalid input frame has been bound");
                }

                // Retrieve the skill output feature that will receive the transformed image
                var transformedImage = binding[NeuralStyleTransformerConst.SKILL_OUTPUTNAME_IMAGE];

                // Bind the WinML input frame
                bindingObj.m_winmlBinding.Bind(
                    NeuralStyleTransformerConst.WINML_MODEL_INPUTNAME, // WinML feature name
                    inputFrame);

                // Bind the WinML output to this instance's pre-allocated frame
                ImageFeatureValue outputImageFeatureValue = ImageFeatureValue.CreateFromVideoFrame(m_outputFrame);
                bindingObj.m_winmlBinding.Bind(NeuralStyleTransformerConst.WINML_MODEL_OUTPUTNAME, outputImageFeatureValue);

                // Run WinML evaluation
                var winMLEvaluationResult = await m_winmlSession.EvaluateAsync(bindingObj.m_winmlBinding, "0");

                // Log the raw outputs for debugging
                IReadOnlyDictionary<string, object> outputs = winMLEvaluationResult.Outputs;
                foreach (var output in outputs)
                {
                    Debug.WriteLine($"{output.Key} : {output.Value} -> {output.Value.GetType()}");
                }

                // Set model output to skill output
                await transformedImage.SetFeatureValueAsync(m_outputFrame);
            });
        }

        /// <summary>
        /// Returns the descriptor of this skill
        /// </summary>
        public ISkillDescriptor SkillDescriptor { get; private set; }

        /// <summary>
        /// Return the execution device with which this skill was initialized
        /// </summary>
        public ISkillExecutionDevice Device { get; private set; }

        /// <summary>
        /// If possible, retrieves a WinML LearningModelDevice that corresponds to an ISkillExecutionDevice
        /// </summary>
        /// <param name="executionDevice"></param>
        /// <returns></returns>
        private static LearningModelDevice GetWinMLDevice(ISkillExecutionDevice executionDevice)
        {
            switch (executionDevice.ExecutionDeviceKind)
            {
                case SkillExecutionDeviceKind.Cpu:
                    return new LearningModelDevice(LearningModelDeviceKind.Cpu);

                case SkillExecutionDeviceKind.Gpu:
                    {
                        var gpuDevice = executionDevice as SkillExecutionDeviceDirectX;
                        return LearningModelDevice.CreateFromDirect3D11Device(gpuDevice.Direct3D11Device);
                    }

                default:
                    throw new ArgumentException("Passing unsupported SkillExecutionDeviceKind");
            }
        }
    }
}
```

This class contains the core functions of our custom skill: the factory method and constructor, which create the skill for the selected style and execution device, and the model evaluation.
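The `switch` in `CreateAsync` can also be written as a lookup table. The sketch below is one possible alternative, assuming the ONNX file names stay exactly as listed in the article; `StyleModelMap` is a hypothetical helper, and the enum is repeated here only so the snippet compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Copy of the article's enum so this sketch is self-contained.
public enum StyleChoices { Candy = 0, Mosaic, Pointilism, RainPrincess, Udnie }

// Hypothetical helper: the same style-to-file mapping as the switch in CreateAsync.
public static class StyleModelMap
{
    private static readonly IReadOnlyDictionary<StyleChoices, string> s_files =
        new Dictionary<StyleChoices, string>
        {
            [StyleChoices.Candy] = "candy.onnx",
            [StyleChoices.Mosaic] = "mosaic.onnx",
            [StyleChoices.Pointilism] = "pointilism.onnx",
            [StyleChoices.RainPrincess] = "rain_princess.onnx",
            [StyleChoices.Udnie] = "udnie.onnx",
        };

    // Falls back to candy.onnx for undefined values, like the switch's initial value.
    public static string GetModelFileName(StyleChoices style) =>
        s_files.TryGetValue(style, out var file) ? file : "candy.onnx";

    // Builds the same package URI the skill passes to StorageFile.GetFileFromApplicationUriAsync.
    public static Uri GetModelUri(StyleChoices style) =>
        new Uri($"ms-appx:///NeuralStyleTransformer/Models/{GetModelFileName(style)}");
}
```

Adding a new style then only needs one enum member and one dictionary entry, instead of another `case` block.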

Next, create another class named “NeuralStyleTransformerDescriptor” and enter the following code:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.InteropServices.WindowsRuntime;
using System.Threading.Tasks;
using Microsoft.AI.Skills.SkillInterfacePreview;
using Windows.Foundation;
using Windows.Graphics.Imaging;

namespace NeuralStyleTransformer
{
    /// <summary>
    /// NeuralStyleTransformerDescriptor class.
    /// Exposes information about the skill and its input/output variable requirements.
    /// Also acts as a factory for NeuralStyleTransformerSkill
    /// </summary>
    public sealed class NeuralStyleTransformerDescriptor : ISkillDescriptor
    {
        // Member variables
        private List<ISkillFeatureDescriptor> m_inputSkillDesc;
        private List<ISkillFeatureDescriptor> m_outputSkillDesc;
        private StyleChoices styleChoice;

        /// <summary>
        /// Sets the style used by skills created from this descriptor afterwards
        /// (a skill that was already created keeps the model it loaded)
        /// </summary>
        public void SetStyle(StyleChoices styleChoice)
        {
            this.styleChoice = styleChoice;
        }

        /// <summary>
        /// NeuralStyleTransformerDescriptor constructor
        /// </summary>
        public NeuralStyleTransformerDescriptor(StyleChoices styleChoice = StyleChoices.Candy)
        {
            Name = "NeuralStyleTransformer";
            Description = "Transform your image into art";

            // {F8D275CE-C244-4E71-8A39-57335D291387}
            Id = new Guid(0xf8d275ce, 0xc244, 0x4e71, 0x8a, 0x39, 0x57, 0x33, 0x5d, 0x29, 0x13, 0x87);

            Version = SkillVersion.Create(
                0, // major version
                1, // minor version
                "Makers ID", // Author name
                "Buitenzorg Makers Club" // Publisher name
            );

            // Describe input feature
            m_inputSkillDesc = new List<ISkillFeatureDescriptor>();
            m_inputSkillDesc.Add(
                new SkillFeatureImageDescriptor(
                    NeuralStyleTransformerConst.SKILL_INPUTNAME_IMAGE,
                    "the input image onto which the model runs",
                    true, // isRequired (since this is an input, it is required to be bound before the evaluation occurs)
                    -1, // width
                    -1, // height
                    -1, // maxDimension
                    BitmapPixelFormat.Nv12,
                    BitmapAlphaMode.Ignore)
            );

            // Describe output feature
            m_outputSkillDesc = new List<ISkillFeatureDescriptor>();
            m_outputSkillDesc.Add(
                new SkillFeatureImageDescriptor(
                    NeuralStyleTransformerConst.SKILL_OUTPUTNAME_IMAGE,
                    "a transformed image",
                    true, // isRequired
                    -1, // width
                    -1, // height
                    -1, // maxDimension
                    BitmapPixelFormat.Nv12,
                    BitmapAlphaMode.Ignore)
            );

            // Remember the selected style (Candy by default)
            this.styleChoice = styleChoice;
        }

        /// <summary>
        /// Retrieves a list of supported ISkillExecutionDevice to run the skill logic on.
        /// </summary>
        /// <returns></returns>
        public IAsyncOperation<IReadOnlyList<ISkillExecutionDevice>> GetSupportedExecutionDevicesAsync()
        {
            return AsyncInfo.Run(async (token) =>
            {
                return await Task.Run(() =>
                {
                    var result = new List<ISkillExecutionDevice>();

                    // Add CPU as supported device
                    result.Add(SkillExecutionDeviceCPU.Create());

                    // Retrieve a list of DirectX devices available on the system and filter them by keeping only the ones that support DX12+ feature level
                    var devices = SkillExecutionDeviceDirectX.GetAvailableDirectXExecutionDevices();
                    var compatibleDevices = devices.Where((device) => (device as SkillExecutionDeviceDirectX).MaxSupportedFeatureLevel >= D3DFeatureLevelKind.D3D_FEATURE_LEVEL_12_0);
                    result.AddRange(compatibleDevices);

                    return result as IReadOnlyList<ISkillExecutionDevice>;
                });
            });
        }

        /// <summary>
        /// Factory method for instantiating and initializing the skill.
        /// Let the skill decide of the optimal or default ISkillExecutionDevice available to use.
        /// </summary>
        /// <returns></returns>
        public IAsyncOperation<ISkill> CreateSkillAsync()
        {
            return AsyncInfo.Run(async (token) =>
            {
                var supportedDevices = await GetSupportedExecutionDevicesAsync();
                ISkillExecutionDevice deviceToUse = supportedDevices.First();

                // Either use the first device returned (CPU) or the highest performing GPU
                int powerIndex = int.MaxValue;
                foreach (var device in supportedDevices)
                {
                    if (device.ExecutionDeviceKind == SkillExecutionDeviceKind.Gpu)
                    {
                        var directXDevice = device as SkillExecutionDeviceDirectX;
                        if (directXDevice.HighPerformanceIndex < powerIndex)
                        {
                            deviceToUse = device;
                            powerIndex = directXDevice.HighPerformanceIndex;
                        }
                    }
                }

                return await CreateSkillAsync(deviceToUse);
            });
        }

        /// <summary>
        /// Factory method for instantiating and initializing the skill.
        /// </summary>
        /// <param name="executionDevice"></param>
        /// <returns></returns>
        public IAsyncOperation<ISkill> CreateSkillAsync(ISkillExecutionDevice executionDevice)
        {
            return AsyncInfo.Run(async (token) =>
            {
                // Create a skill instance with the executionDevice supplied
                var skillInstance = await NeuralStyleTransformerSkill.CreateAsync(this, executionDevice, styleChoice);

                return skillInstance as ISkill;
            });
        }

        /// <summary>
        /// Returns a description of the skill.
        /// </summary>
        public string Description { get; private set; }

        /// <summary>
        /// Returns a unique skill identifier.
        /// </summary>
        public Guid Id { get; }

        /// <summary>
        /// Returns a list of descriptors that correlate with each input SkillFeature.
        /// </summary>
        public IReadOnlyList<ISkillFeatureDescriptor> InputFeatureDescriptors => m_inputSkillDesc;

        /// <summary>
        /// Returns a set of metadata that may control the skill execution differently than by feeding an input
        /// i.e. internal state, sub-process execution frequency, etc.
        /// </summary>
        public IReadOnlyDictionary<string, string> Metadata => null;

        /// <summary>
        /// Returns the skill name.
        /// </summary>
        public string Name { get; private set; }

        /// <summary>
        /// Returns a list of descriptors that correlate with each output SkillFeature.
        /// </summary>
        public IReadOnlyList<ISkillFeatureDescriptor> OutputFeatureDescriptors => m_outputSkillDesc;

        /// <summary>
        /// Returns the Version information of the skill.
        /// </summary>
        public SkillVersion Version { get; }
    }
}
```

This class describes the skill, lists the devices it can run on (CPU, GPU, and so on), creates and initializes the skill, and holds the few settings it needs, such as the selected style.
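`CreateSkillAsync()` starts from the first supported device (the CPU) and switches to the GPU with the lowest `HighPerformanceIndex`. The sketch below reproduces only that selection rule, using hypothetical stand-in types (`DeviceKind`, `DeviceInfo`, `DeviceSelector`) instead of the real `ISkillExecutionDevice` classes, so the logic can be tried without Windows.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for ISkillExecutionDevice / SkillExecutionDeviceDirectX,
// reduced to the two properties CreateSkillAsync() looks at.
public enum DeviceKind { Cpu, Gpu }

public sealed class DeviceInfo
{
    public DeviceInfo(string name, DeviceKind kind, int highPerformanceIndex = int.MaxValue)
    {
        Name = name;
        Kind = kind;
        HighPerformanceIndex = highPerformanceIndex;
    }

    public string Name { get; }
    public DeviceKind Kind { get; }

    // Lower index = higher performance, as with HighPerformanceIndex in the skill code.
    public int HighPerformanceIndex { get; }
}

public static class DeviceSelector
{
    // Same rule as CreateSkillAsync(): keep the first device (the CPU) unless
    // a GPU is available, in which case take the one with the lowest index.
    public static DeviceInfo Pick(IReadOnlyList<DeviceInfo> supported)
    {
        if (supported == null || supported.Count == 0)
        {
            throw new ArgumentException("At least one device (the CPU) is expected.");
        }

        DeviceInfo best = supported[0];
        int powerIndex = int.MaxValue;
        foreach (var device in supported.Where(d => d.Kind == DeviceKind.Gpu))
        {
            if (device.HighPerformanceIndex < powerIndex)
            {
                best = device;
                powerIndex = device.HighPerformanceIndex;
            }
        }
        return best;
    }
}
```

Because the CPU is always added first by `GetSupportedExecutionDevicesAsync()`, the skill still runs on machines without a DirectX 12 capable GPU.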

That completes the custom skill; all that's left is to build it (Build > Build Solution, or Ctrl + Shift + B).

Next, we will create a sample app that uses this skill. The app can take input images from the camera, an ink canvas, an image file (file picker), or media. Let's continue coding:

Add a new project named “NeuralStyleApp” of type Blank App (Universal Windows).

Then right-click the project and choose Add > Reference. Select Projects, check “NeuralStyleTransformer”, and click OK.

Add the NuGet package “Microsoft.Toolkit.Uwp.UI.Controls”.

Then right-click the Assets folder and choose Add > Existing Item. Add any image named “DefaultImage.jpg”, then set its “Copy to Output Directory” property to “Copy if newer”. This will be the default image shown when the app starts.

Then add a class file named “HelperMethods” (it defines the ImageHelper and FrameRenderer classes) and enter the following code:

```csharp
//*@@@+++@@@@******************************************************************
//
// Microsoft Windows Media Foundation
// Copyright (C) Microsoft Corporation. All rights reserved.
//
//*@@@---@@@@******************************************************************

using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Runtime.InteropServices.WindowsRuntime;
using Windows.AI.MachineLearning;
using Windows.Foundation;
using Windows.Graphics.Imaging;
using Windows.Media;
using Windows.Storage;
using Windows.Storage.Pickers;
using Windows.Storage.Streams;
using Windows.UI.Core;
using Windows.UI.Xaml.Controls;
using Windows.UI.Xaml.Media.Imaging;

namespace NeuralStyleApp
{
    public sealed class ImageHelper
    {
        /// <summary>
        /// Crop image given an imageVariableDescription
        /// </summary>
        /// <param name="inputVideoFrame"></param>
        /// <param name="imageVariableDescription"></param>
        /// <returns></returns>
        public static IAsyncOperation<VideoFrame> CenterCropImageAsync(VideoFrame inputVideoFrame, ImageFeatureDescriptor imageVariableDescription)
        {
            return CenterCropImageAsync(inputVideoFrame, imageVariableDescription.Width, imageVariableDescription.Height);
        }

        /// <summary>
        /// Crop image given a target width and height
        /// </summary>
        /// <param name="inputVideoFrame"></param>
        /// <param name="targetWidth"></param>
        /// <param name="targetHeight"></param>
        /// <returns>the cropped frame, or null if cropping failed</returns>
        public static IAsyncOperation<VideoFrame> CenterCropImageAsync(VideoFrame inputVideoFrame, uint targetWidth, uint targetHeight)
        {
            return AsyncInfo.Run(async (token) =>
            {
                bool useDX = inputVideoFrame.SoftwareBitmap == null;
                VideoFrame result = null;

                // Center crop
                try
                {
                    // Validate the frame before reading its dimensions
                    if (useDX && inputVideoFrame.Direct3DSurface == null)
                    {
                        throw (new Exception("Invalid VideoFrame without SoftwareBitmap nor D3DSurface"));
                    }

                    // Since we will be center-cropping the image, figure which dimension has to be clipped
                    var frameHeight = useDX ? inputVideoFrame.Direct3DSurface.Description.Height : inputVideoFrame.SoftwareBitmap.PixelHeight;
                    var frameWidth = useDX ? inputVideoFrame.Direct3DSurface.Description.Width : inputVideoFrame.SoftwareBitmap.PixelWidth;

                    Rect cropRect = GetCropRect(frameWidth, frameHeight, targetWidth, targetHeight);
                    BitmapBounds cropBounds = new BitmapBounds()
                    {
                        Width = (uint)cropRect.Width,
                        Height = (uint)cropRect.Height,
                        X = (uint)cropRect.X,
                        Y = (uint)cropRect.Y
                    };

                    // Create the VideoFrame to be bound as input for evaluation
                    // (the same BGRA8 format is used whether the source is CPU- or GPU-backed)
                    result = new VideoFrame(BitmapPixelFormat.Bgra8,
                                            (int)targetWidth,
                                            (int)targetHeight,
                                            BitmapAlphaMode.Premultiplied);

                    await inputVideoFrame.CopyToAsync(result, cropBounds, null);
                }
                catch (Exception ex)
                {
                    Debug.WriteLine(ex.ToString());
                }

                return result;
            });
        }

        /// <summary>
        /// Calculate the center crop bounds given a set of source and target dimensions
        /// </summary>
        /// <param name="frameWidth"></param>
        /// <param name="frameHeight"></param>
        /// <param name="targetWidth"></param>
        /// <param name="targetHeight"></param>
        /// <returns></returns>
        public static Rect GetCropRect(int frameWidth, int frameHeight, uint targetWidth, uint targetHeight)
        {
            Rect rect = new Rect();

            // we need to recalculate the crop bounds in order to correctly center-crop the input image
            float flRequiredAspectRatio = (float)targetWidth / targetHeight;

            if (flRequiredAspectRatio * frameHeight > (float)frameWidth)
            {
                // clip on the y axis
                rect.Height = (uint)Math.Min((frameWidth / flRequiredAspectRatio + 0.5f), frameHeight);
                rect.Width = (uint)frameWidth;
                rect.X = 0;
                rect.Y = (uint)(frameHeight - rect.Height) / 2;
            }
            else // clip on the x axis
            {
                rect.Width = (uint)Math.Min((flRequiredAspectRatio * frameHeight + 0.5f), frameWidth);
                rect.Height = (uint)frameHeight;
                rect.X = (uint)(frameWidth - rect.Width) / 2;
                rect.Y = 0;
            }

            return rect;
        }

        /// <summary>
        /// Pass the input frame to a frame renderer and ensure proper image format is used
        /// </summary>
        /// <param name="frameRenderer"></param>
        /// <param name="inputVideoFrame"></param>
        /// <returns></returns>
        public static IAsyncAction RenderFrameAsync(FrameRenderer frameRenderer, VideoFrame inputVideoFrame)
        {
            return AsyncInfo.Run(async (token) =>
            {
                bool useDX = inputVideoFrame.SoftwareBitmap == null;
                if (frameRenderer == null)
                {
                    throw (new InvalidOperationException("FrameRenderer is null"));
                }

                SoftwareBitmap softwareBitmap = null;
                if (useDX)
                {
                    // GPU-backed frame: copy it to CPU memory first
                    softwareBitmap = await SoftwareBitmap.CreateCopyFromSurfaceAsync(inputVideoFrame.Direct3DSurface);
                    softwareBitmap = SoftwareBitmap.Convert(softwareBitmap, BitmapPixelFormat.Bgra8, BitmapAlphaMode.Premultiplied);
                }
                else
                {
                    // SoftwareBitmapSource expects BGRA8 with premultiplied alpha, so render a converted copy
                    softwareBitmap = SoftwareBitmap.Convert(inputVideoFrame.SoftwareBitmap, BitmapPixelFormat.Bgra8, BitmapAlphaMode.Premultiplied);
                }

                frameRenderer.RenderFrame(softwareBitmap);
            });
        }

        /// <summary>
        /// Launch file picker for user to select a picture file and return a VideoFrame
        /// </summary>
        /// <returns>VideoFrame instantiated from the selected image file</returns>
        public static IAsyncOperation<VideoFrame> LoadVideoFrameFromFilePickedAsync()
        {
            return AsyncInfo.Run(async (token) =>
            {
                // Trigger file picker to select an image file
                FileOpenPicker fileOpenPicker = new FileOpenPicker();
                fileOpenPicker.SuggestedStartLocation = PickerLocationId.PicturesLibrary;
                fileOpenPicker.FileTypeFilter.Add(".jpg");
                fileOpenPicker.FileTypeFilter.Add(".png");
                fileOpenPicker.ViewMode = PickerViewMode.Thumbnail;

                StorageFile selectedStorageFile = await fileOpenPicker.PickSingleFileAsync();
                if (selectedStorageFile == null)
                {
                    return null;
                }

                return await LoadVideoFrameFromStorageFileAsync(selectedStorageFile);
            });
        }

        /// <summary>
        /// Decode image from a StorageFile and return a VideoFrame
        /// </summary>
        /// <param name="file"></param>
        /// <returns></returns>
        public static IAsyncOperation<VideoFrame> LoadVideoFrameFromStorageFileAsync(StorageFile file)
        {
            return AsyncInfo.Run(async (token) =>
            {
                VideoFrame resultFrame = null;
                SoftwareBitmap softwareBitmap;

                using (IRandomAccessStream stream = await file.OpenAsync(FileAccessMode.Read))
                {
                    // Create the decoder from the stream
                    BitmapDecoder decoder = await BitmapDecoder.CreateAsync(stream);

                    // Get the SoftwareBitmap representation of the file in BGRA8 format
                    softwareBitmap = await decoder.GetSoftwareBitmapAsync();
                    softwareBitmap = SoftwareBitmap.Convert(softwareBitmap, BitmapPixelFormat.Bgra8, BitmapAlphaMode.Premultiplied);
                }

                // Encapsulate the image in the WinML image type (VideoFrame) to be bound and evaluated
                resultFrame = VideoFrame.CreateWithSoftwareBitmap(softwareBitmap);

                return resultFrame;
            });
        }

        /// <summary>
        /// Launch file picker for user to select a file and save a VideoFrame to it
        /// </summary>
        /// <param name="frame"></param>
        /// <returns></returns>
        public static IAsyncAction SaveVideoFrameToFilePickedAsync(VideoFrame frame)
        {
            return AsyncInfo.Run(async (token) =>
            {
                // Trigger file picker to select an image file
                FileSavePicker fileSavePicker = new FileSavePicker();
                fileSavePicker.SuggestedStartLocation = PickerLocationId.PicturesLibrary;
                fileSavePicker.FileTypeChoices.Add("image file", new List<string>() { ".jpg" });
                fileSavePicker.SuggestedFileName = "NewImage";

                StorageFile selectedStorageFile = await fileSavePicker.PickSaveFileAsync();
                if (selectedStorageFile == null)
                {
                    return;
                }

                using (IRandomAccessStream stream = await selectedStorageFile.OpenAsync(FileAccessMode.ReadWrite))
                {
                    // Truncate the file in case an existing, larger file is being overwritten
                    stream.Size = 0;

                    VideoFrame frameToEncode = frame;
                    BitmapEncoder encoder = await BitmapEncoder.CreateAsync(BitmapEncoder.JpegEncoderId, stream);

                    // GPU-backed frame: copy it into a CPU (SoftwareBitmap) frame first
                    if (frameToEncode.SoftwareBitmap == null)
                    {
                        Debug.Assert(frame.Direct3DSurface != null);
                        frameToEncode = new VideoFrame(BitmapPixelFormat.Bgra8, frame.Direct3DSurface.Description.Width, frame.Direct3DSurface.Description.Height);
                        await frame.CopyToAsync(frameToEncode);
                    }

                    encoder.SetSoftwareBitmap(
                        frameToEncode.SoftwareBitmap.BitmapPixelFormat.Equals(BitmapPixelFormat.Bgra8) ?
                        frameToEncode.SoftwareBitmap
                        : SoftwareBitmap.Convert(frameToEncode.SoftwareBitmap, BitmapPixelFormat.Bgra8));

                    await encoder.FlushAsync();
                }
            });
        }
    }

    public sealed class FrameRenderer
    {
        private Image _imageElement;
        private SoftwareBitmap _backBuffer;
        private bool _taskRunning = false;

        public FrameRenderer(Image imageElement)
        {
            _imageElement = imageElement;
            _imageElement.Source = new SoftwareBitmapSource();
        }

        public void RenderFrame(SoftwareBitmap softwareBitmap)
        {
            if (softwareBitmap != null)
            {
                // Swap the processed frame to _backBuffer and trigger UI thread to render it
                _backBuffer = softwareBitmap;

                // Changes to xaml ImageElement must happen in UI thread through Dispatcher
                var task = _imageElement.Dispatcher.RunAsync(CoreDispatcherPriority.Normal,
                    async () =>
                    {
                        // Don't let two copies of this task run at the same time.
                        if (_taskRunning)
                        {
                            return;
                        }
                        _taskRunning = true;

                        try
                        {
                            var imageSource = (SoftwareBitmapSource)_imageElement.Source;
                            await imageSource.SetBitmapAsync(_backBuffer);
                        }
                        catch (Exception ex)
                        {
                            Debug.WriteLine(ex.Message);
                        }

                        _taskRunning = false;
                    });
            }
            else
            {
                // Clear the image element
                var task = _imageElement.Dispatcher.RunAsync(CoreDispatcherPriority.Normal,
                    async () =>
                    {
                        var imageSource = (SoftwareBitmapSource)_imageElement.Source;
                        await imageSource.SetBitmapAsync(null);
                    });
            }
        }
    }
}
```

These are helper classes from Microsoft that let us crop images, load video frames, save frames, render frames to an Image element, and so on.
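The arithmetic in `GetCropRect` keeps the largest centered region that has the target aspect ratio. Here is a minimal restatement of the same formula, using a hypothetical `CropBounds` struct and `CenterCrop` class in place of `Rect`/`BitmapBounds` so it can be tried outside UWP.

```csharp
using System;

// Hypothetical plain struct standing in for Windows.Foundation.Rect / BitmapBounds.
public readonly struct CropBounds
{
    public CropBounds(uint x, uint y, uint width, uint height)
    {
        X = x; Y = y; Width = width; Height = height;
    }

    public uint X { get; }
    public uint Y { get; }
    public uint Width { get; }
    public uint Height { get; }
}

public static class CenterCrop
{
    // Same arithmetic as ImageHelper.GetCropRect.
    public static CropBounds Compute(int frameWidth, int frameHeight, uint targetWidth, uint targetHeight)
    {
        float requiredAspect = (float)targetWidth / targetHeight;

        if (requiredAspect * frameHeight > frameWidth)
        {
            // Frame is too tall for the target ratio: trim top and bottom equally.
            uint height = (uint)Math.Min(frameWidth / requiredAspect + 0.5f, frameHeight);
            return new CropBounds(0, (uint)(frameHeight - height) / 2, (uint)frameWidth, height);
        }

        // Frame is too wide (or already matches): trim left and right equally.
        uint width = (uint)Math.Min(requiredAspect * frameHeight + 0.5f, frameWidth);
        return new CropBounds((uint)(frameWidth - width) / 2, 0, width, (uint)frameHeight);
    }
}
```

For example, a 640x480 frame with the skill's 224x224 target (aspect ratio 1) keeps a 480x480 square starting at x = 80; a 480x640 portrait frame keeps the same square starting at y = 80.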

Create another class named "SkillHelper" and enter the following code:

```csharp
// Copyright (C) Microsoft Corporation. All rights reserved.

using Microsoft.AI.Skills.SkillInterfacePreview;

namespace SkillHelper
{
    public static class SkillHelperMethods
    {
        /// <summary>
        /// Construct a string from the ISkillDescriptor specified that can be used to display its content
        /// </summary>
        /// <param name="desc"></param>
        /// <returns></returns>
        public static string GetSkillDescriptorString(ISkillDescriptor desc)
        {
            if (desc == null)
            {
                return "";
            }

            return $"Description: {desc.Description}" +
                $"\nAuthor: {desc.Version.Author}" +
                $"\nPublisher: {desc.Version.Publisher}" +
                $"\nVersion: {desc.Version.Major}.{desc.Version.Minor}" +
                $"\nUnique ID: {desc.Id}";
        }

        /// <summary>
        /// Construct a string from the ISkillFeatureDescriptor specified that can be used to display its content
        /// </summary>
        /// <param name="desc"></param>
        /// <returns></returns>
        public static string GetSkillFeatureDescriptorString(ISkillFeatureDescriptor desc)
        {
            if (desc == null)
            {
                return "";
            }

            string result = $"Name: {desc.Name}" +
                $"\nDescription: {desc.Description}" +
                $"\nIsRequired: {desc.IsRequired}" +
                $"\nType: {desc.FeatureKind}";

            if (desc is ISkillFeatureImageDescriptor)
            {
                ISkillFeatureImageDescriptor imageDesc = desc as ISkillFeatureImageDescriptor;
                result += $"\nWidth: {imageDesc.Width}" +
                    $"\nHeight: {imageDesc.Height}" +
                    $"\nSupportedBitmapPixelFormat: {imageDesc.SupportedBitmapPixelFormat}" +
                    $"\nSupportedBitmapAlphaMode: {imageDesc.SupportedBitmapAlphaMode}";
            }
            else if (desc is ISkillFeatureTensorDescriptor)
            {
                ISkillFeatureTensorDescriptor tensorDesc = desc as ISkillFeatureTensorDescriptor;
                result += $"\nElementKind: {tensorDesc.ElementKind}" +
                    "\nShape: [";
                for (int i = 0; i < tensorDesc.Shape.Count; i++)
                {
                    result += $"{tensorDesc.Shape[i]}";
                    if (i < tensorDesc.Shape.Count - 1)
                    {
                        result += ", ";
                    }
                }
                result += "]";
            }
            else if (desc is ISkillFeatureMapDescriptor)
            {
                ISkillFeatureMapDescriptor mapDesc = desc as ISkillFeatureMapDescriptor;
                result += $"\nKeyElementKind: {mapDesc.KeyElementKind}" +
                    $"\nValueElementKind: {mapDesc.ValueElementKind}" +
                    $"\nValidKeys:";
                foreach (var validKey in mapDesc.ValidKeys)
                {
                    result += $"\n\t{validKey}";
                }
            }

            return result;
        }
    }
}
```

This helper class from Microsoft formats information about a skill for display, from its author to the input/output parameters the skill requires.
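The loop that builds the tensor `Shape` text in `GetSkillFeatureDescriptorString` can be written more compactly with `string.Join`. A small sketch, assuming the shape is available as a list of `long` values (check the actual element type of `ISkillFeatureTensorDescriptor.Shape` in your package version); `ShapeFormat` is a hypothetical name.

```csharp
using System.Collections.Generic;

public static class ShapeFormat
{
    // Produces the same text as the for-loop in GetSkillFeatureDescriptorString,
    // e.g. "[1, 3, 224, 224]"; string.Join puts ", " only between elements.
    public static string Format(IReadOnlyList<long> shape) => "[" + string.Join(", ", shape) + "]";
}
```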

Then open MainPage.xaml and replace its contents with the following code:

<Page

x:Class="NeuralStyleApp.MainPage"

xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:controls="using:Microsoft.Toolkit.Uwp.UI.Controls"

xmlns:local="using:NeuralStyleApp"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

mc:Ignorable="d"

Background="{ThemeResource ApplicationPageBackgroundThemeBrush}">

<Page.Resources>

<SolidColorBrush x:Key="TranslucentBlackBrush" Color="Black" Opacity="0.3"/>

<Style x:Key="TextBlockStyling" TargetType="TextBlock">

<Setter Property="Foreground" Value="Black"/>

</Style>

</Page.Resources>

<ScrollViewer Background="{ThemeResource ApplicationPageBackgroundThemeBrush}">

<StackPanel>

<controls:Expander VerticalAlignment="Top"

Margin="0,0,0,0"

Header="Details and options.."

HorizontalContentAlignment="Stretch"

Foreground="Black"

Background="#FF7F7F7F"

BorderBrush="Black"

IsExpanded="False"

ExpandDirection="Down">

<StackPanel>

<TextBlock Name="UISkillName" Text="Skill name" HorizontalAlignment="Center" FontSize="16" FontWeight="Bold"/>

<TextBlock Text="Skill Description:" FontWeight="Bold"/>

<TextBox Name="UISkillDescription" Text="Skill description" IsReadOnly="True" AcceptsReturn="True"/>

<TextBlock Text="Skill input descriptions:" FontWeight="Bold"/>

<TextBox Name="UISkillInputDescription" IsReadOnly="True" AcceptsReturn="True"/>

<TextBlock Text="Skill output descriptions:" FontWeight="Bold"/>

<TextBox Name="UISkillOutputDescription" IsReadOnly="True" AcceptsReturn="True"/>

<TextBlock Text="Available execution devices:" FontWeight="Bold"/>

<ListBox Name="UISkillExecutionDevices" SelectionChanged="UISkillExecutionDevices_SelectionChanged" />

</StackPanel>

</controls:Expander>

<Grid >

<Grid>

<Grid.RowDefinitions>

<RowDefinition Height="80"/>

<RowDefinition Height="300*"/>

<RowDefinition Height="30"/>

</Grid.RowDefinitions>

<!--Status and result display-->

<StackPanel x:Name="UIStatusPanel" Background="#BFFFFFFF" VerticalAlignment="Top" Grid.Row="0">

<ContentControl Name="UIModelControls">

<StackPanel Orientation="Horizontal">

<ListBox Name="UIStyleList" SelectionChanged="UIStyleList_SelectionChanged">

<ListBox.ItemsPanel>

<ItemsPanelTemplate>

<VirtualizingStackPanel Orientation="Horizontal"/>

</ItemsPanelTemplate>

</ListBox.ItemsPanel>

</ListBox>

</StackPanel>

</ContentControl>

<!--Image preview and acquisition control-->

<ContentControl Name="UIImageControls"

VerticalAlignment="Stretch"

HorizontalAlignment="Stretch"

IsEnabled="False">

<StackPanel Orientation="Horizontal">

<Button Name="UIButtonLiveStream"

ToolTipService.ToolTip="Camera preview"

Click="UIButtonLiveStream_Click">

<Button.Content>

<SymbolIcon Symbol="Video"/>

</Button.Content>

</Button>

<Button Name="UIButtonAcquireImage"

ToolTipService.ToolTip="Take a photo"

Click="UIButtonAcquireImage_Click">

<Button.Content>

<SymbolIcon Symbol="Camera"/>

</Button.Content>

</Button>

<Button Name="UIButtonFilePick"

ToolTipService.ToolTip="Select an image from a file"

Click="UIButtonFilePick_Click">

<Button.Content>

<SymbolIcon Symbol="OpenFile"/>

</Button.Content>

</Button>

<Button Name="UIButtonInking"

ToolTipService.ToolTip="Draw on a canvas"

Click="UIButtonInking_Click">

<Button.Content>

<SymbolIcon Symbol="Edit"/>

</Button.Content>

</Button>

<Button Name="UIButtonSaveImage"

ToolTipService.ToolTip="Save the image result to a file"

IsEnabled="false"

VerticalAlignment="Bottom"

Click="UIButtonSaveImage_Click"

Background="#FF939393" >

<Button.Content>

<SymbolIcon Symbol="Save"/>

</Button.Content>

</Button>

</StackPanel>

</ContentControl>

</StackPanel>

<!--<StackPanel Orientation="Horizontal">-->

<!--<Viewbox Stretch="Uniform" HorizontalAlignment="Stretch" VerticalAlignment="Stretch" Grid.Row="1">-->

<Grid Grid.Row="1">

<Grid.ColumnDefinitions>

<ColumnDefinition Width="200*"/>

<ColumnDefinition Width="200*"/>

</Grid.ColumnDefinitions>

<Viewbox Stretch="Uniform">

<StackPanel Name="UIInkControls"

Visibility="Collapsed"

Grid.Column="0">

<InkToolbar TargetInkCanvas="{x:Bind UIInkCanvasInput}" VerticalAlignment="Top" />

<!--inking canvas-->

<Viewbox Stretch="Uniform"

MaxWidth="720"

MaxHeight="720">

<Grid BorderBrush="Black"

BorderThickness="1">

<Grid Name="UIInkGrid"

Background="White"

MinWidth="200"

MinHeight="200"

MaxWidth="720"

MaxHeight="720">

<InkCanvas Name="UIInkCanvasInput"/>

</Grid>

</Grid>

</Viewbox>

</StackPanel>

</Viewbox>

<!--Camera preview-->

<MediaPlayerElement Name="UIMediaPlayerElement"

Stretch="Uniform"

AreTransportControlsEnabled="False"

Canvas.ZIndex="-1"

MaxWidth="720"

MaxHeight="720"

Grid.Column="0"/>

<Image Name="UIInputImage"

Grid.Column="0"

Stretch="Uniform"

MaxWidth="720"

MaxHeight="720"/>

<Image Name="UIResultImage"

Grid.Column="1"

Stretch="Uniform"

MaxWidth="720"

MaxHeight="720"/>

</Grid>

<!--</Viewbox>-->

<StackPanel Name="UICameraSelectionControls" Orientation="Vertical" Visibility="Collapsed" VerticalAlignment="Bottom" HorizontalAlignment="Left" Grid.Row="1">

<TextBlock Text="Camera: " Style="{StaticResource TextBlockStyling}"/>

<ComboBox Name="UICmbCamera" SelectionChanged="UICmbCamera_SelectionChanged" Foreground="White" >

<ComboBox.Background>

<SolidColorBrush Color="Black" Opacity="0.3"/>

</ComboBox.Background>

</ComboBox>

<TextBlock Text="Preview resolution: " Style="{StaticResource TextBlockStyling}"/>

<TextBlock Name="UITxtBlockPreviewProperties" Text="0x0" Style="{StaticResource TextBlockStyling}"/>

</StackPanel>

<Border x:Name="UIStatusBorder" Grid.Row="2">

<StackPanel Orientation="Horizontal">

<TextBlock x:Name="StatusBlock"

Text="Select a style to begin"

FontWeight="Bold"

Width="700"

Margin="10,2,0,0"

TextWrapping="Wrap" />

<TextBlock

Text="Capture FPS"

Margin="10,2,0,0"

FontWeight="Bold" />

<TextBlock x:Name="CaptureFPS"

Text="0 fps"

Margin="10,2,0,0"

TextWrapping="Wrap" />

<TextBlock

Text="Render FPS"

Margin="10,2,0,0"

FontWeight="Bold"/>

<TextBlock x:Name="RenderFPS"

Text="0 fps"

Margin="10,2,0,0"

TextWrapping="Wrap" />

</StackPanel>

</Border>

</Grid>

</Grid>

<ProgressRing Name="UIProcessingProgressRing"

MaxWidth="720"

MaxHeight="720"

IsActive="false"

Visibility="Collapsed"/>

<TextBox Name="UISkillOutputDetails" Text="Skill output var description" IsReadOnly="True" AcceptsReturn="True"/>

</StackPanel>

</ScrollViewer>

</Page>

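Visually, the page has 3 main parts. The first is an expander that shows the skill's description and lets you pick one of the supported execution devices. The second is a panel with the style list and buttons for choosing the input source (camera, ink, video via the media player, or an image via the file picker), plus a button to save the result. The third is the display area, which shows the input in the left column and the stylized result in the right column, with a status bar underneath showing the capture and render FPS.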
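In the code-behind, the style list is filled from the `_kModelFileNames` list, and `UIStyleList_SelectionChanged` casts the selected index straight to `StyleChoices`, so both lists must stay in the same order. A minimal sketch, assuming you would rather derive the list from the enum itself, uses `Enum.GetNames`. `StyleListSource`, `GetStyleNames` and `FromIndex` are hypothetical names, and the enum is repeated from the NeuralStyleTransformerConst file only so the block compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Copy of the enum declared next to NeuralStyleTransformerConst,
// repeated here only so that this sketch compiles on its own.
public enum StyleChoices
{
    Candy = 0, Mosaic, Pointilism, RainPrincess, Udnie
}

public static class StyleListSource
{
    // Hypothetical helper: builds the ListBox items from the enum, so the
    // selected index always maps to the matching StyleChoices value.
    public static List<string> GetStyleNames()
    {
        // Enum.GetNames returns the names ordered by their underlying values
        return new List<string>(Enum.GetNames(typeof(StyleChoices)));
    }

    // Hypothetical helper: converts a ListBox index back to a style,
    // rejecting indices that do not correspond to a defined value.
    public static StyleChoices FromIndex(int index)
    {
        if (!Enum.IsDefined(typeof(StyleChoices), index))
        {
            throw new ArgumentOutOfRangeException(nameof(index));
        }
        return (StyleChoices)index;
    }
}
```

With this, the page could set `UIStyleList.ItemsSource = StyleListSource.GetStyleNames();` and adding a new style would only require touching the enum (and shipping the matching ONNX file).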

Then add the code-behind in MainPage.xaml.cs with the following code:

using Microsoft.AI.Skills.SkillInterfacePreview;

using NeuralStyleTransformer;

using System;

using System.Collections.Generic;

using System.Diagnostics;

using System.IO;

using System.Linq;

using System.Runtime.InteropServices.WindowsRuntime;

using System.Threading;

using System.Threading.Tasks;

using Windows.Foundation;

using Windows.Foundation.Collections;

using Windows.Graphics.Imaging;

using Windows.Media;

using Windows.Media.Capture;

using Windows.Media.Capture.Frames;

using Windows.Media.Core;

using Windows.Media.MediaProperties;

using Windows.Media.Playback;

using Windows.Storage;

using Windows.Storage.Pickers;

using Windows.Storage.Streams;

using Windows.UI.Core;

using Windows.UI.Popups;

using Windows.UI.Xaml;

using Windows.UI.Xaml.Controls;

using Windows.UI.Xaml.Controls.Primitives;

using Windows.UI.Xaml.Data;

using Windows.UI.Xaml.Input;

using Windows.UI.Xaml.Media;

using Windows.UI.Xaml.Media.Imaging;

using Windows.UI.Xaml.Navigation;

// The Blank Page item template is documented at https://go.microsoft.com/fwlink/?LinkId=402352&clcid=0x409

namespace NeuralStyleApp

{

/// <summary>

/// An empty page that can be used on its own or navigated to within a Frame.

/// </summary>

public sealed partial class MainPage : Page

{

// Camera related

private MediaCapture _mediaCapture;

private MediaPlayer _mediaPlayer;

private List<MediaFrameSourceGroup> _mediaFrameSourceGroupList;

private MediaFrameSourceGroup _selectedMediaFrameSourceGroup;

private MediaFrameSource _selectedMediaFrameSource;

private MediaFrameReader _modelInputFrameReader;

// States

private bool _isReadyForEval = true;

private bool _isProcessingFrames = false;

private bool _showInitialImageAndProgress = true;

private SemaphoreSlim _evaluationLock = new SemaphoreSlim(1);

private SemaphoreSlim _frameAquisitionLock = new SemaphoreSlim(1);

private DispatcherTimer inkEvaluationDispatcherTimer;

private bool _isrocessingImages = true;

private bool _proceedWithEval = true;

// Rendering related

private FrameRenderer _resultframeRenderer;

private FrameRenderer _inputFrameRenderer;

// WinML related

private readonly List<string> _kModelFileNames = new List<string>

{

"Candy","Mosaic","Pointilism", "RainPrincess", "Udnie"

};

private const string _kDefaultImageFileName = "DefaultImage.jpg";

private List<string> _labels = new List<string>();

VideoFrame _inputFrame = null;

VideoFrame _outputFrame = null;

// Debug

private Stopwatch _perfStopwatch = new Stopwatch(); // performance Stopwatch used throughout

private DispatcherTimer _FramesPerSecondTimer = new DispatcherTimer();

private long _CaptureFPS = 0;

public static long _RenderFPS = 0;

private int _LastFPSTick = 0;

// Skill-related variables

private NeuralStyleTransformerDescriptor m_skillDescriptor = null;

private NeuralStyleTransformerSkill m_skill = null;

private NeuralStyleTransformerBinding m_binding = null;

// UI-related variables

private SoftwareBitmapSource m_bitmapSource = new SoftwareBitmapSource(); // used to render an image from a file

private IReadOnlyList<ISkillExecutionDevice> m_availableExecutionDevices = null;

// Synchronization

private SemaphoreSlim m_lock = new SemaphoreSlim(1);

/// <summary>

/// MainPage constructor

/// </summary>

public MainPage()

{

this.InitializeComponent();

}

protected async override void OnNavigatedTo(NavigationEventArgs e)

{

Debug.WriteLine("OnNavigatedTo");

try

{

// Instantiate the skill descriptor to display details about the skill and populate the UI

m_skillDescriptor = new NeuralStyleTransformerDescriptor();

m_availableExecutionDevices = await m_skillDescriptor.GetSupportedExecutionDevicesAsync();

// Show skill description members in UI

UISkillName.Text = m_skillDescriptor.Name;

UISkillDescription.Text = SkillHelper.SkillHelperMethods.GetSkillDescriptorString(m_skillDescriptor);

int featureIndex = 0;

foreach (var featureDesc in m_skillDescriptor.InputFeatureDescriptors)

{

UISkillInputDescription.Text += SkillHelper.SkillHelperMethods.GetSkillFeatureDescriptorString(featureDesc);

if (featureIndex++ < m_skillDescriptor.InputFeatureDescriptors.Count - 1)

{

UISkillInputDescription.Text += "\n----\n";

}

}

featureIndex = 0;

foreach (var featureDesc in m_skillDescriptor.OutputFeatureDescriptors)

{

UISkillOutputDescription.Text += SkillHelper.SkillHelperMethods.GetSkillFeatureDescriptorString(featureDesc);

if (featureIndex++ < m_skillDescriptor.OutputFeatureDescriptors.Count - 1)

{

UISkillOutputDescription.Text += "\n----\n";

}

}

if (m_availableExecutionDevices.Count == 0)

{

UISkillOutputDetails.Text = "No execution devices available, this skill cannot run on this device";

}

else

{

// Display available execution devices

UISkillExecutionDevices.ItemsSource = m_availableExecutionDevices.Select((device) => device.Name).ToList();

UISkillExecutionDevices.SelectedIndex = 0;

// Allow the user to interact with the app

UIButtonFilePick.IsEnabled = true;

UIButtonFilePick.Focus(FocusState.Keyboard);

}

}

catch (Exception ex)

{

await new MessageDialog(ex.Message).ShowAsync();

}

_resultframeRenderer = new FrameRenderer(UIResultImage);

_inputFrameRenderer = new FrameRenderer(UIInputImage);

UIStyleList.ItemsSource = _kModelFileNames;

UIInkCanvasInput.InkPresenter.InputDeviceTypes =

CoreInputDeviceTypes.Mouse

| CoreInputDeviceTypes.Pen

| CoreInputDeviceTypes.Touch;

UIInkCanvasInput.InkPresenter.UpdateDefaultDrawingAttributes(

new Windows.UI.Input.Inking.InkDrawingAttributes()

{

Color = Windows.UI.Colors.Black,

Size = new Size(8, 8),

IgnorePressure = true,

IgnoreTilt = true,

}

);

// Select first style

UIStyleList.SelectedIndex = 0;

// Create a 1 second timer

_FramesPerSecondTimer.Tick += _FramesPerSecond_Tick;

_FramesPerSecondTimer.Interval = new TimeSpan(0, 0, 1);

_FramesPerSecondTimer.Start();

}

private void _FramesPerSecond_Tick(object sender, object e)

{

// how many seconds has it been?

// Note: we do this math since even though we asked for the event to be

// dispatched every 1s , due to timing and delays, it might not come

// exactly every second. and on a busy system it could even be a couple of

// seconds until it is delivered.

int fpsTick = System.Environment.TickCount;

if (_LastFPSTick > 0)

{

float numberOfSeconds = ((float)(fpsTick - _LastFPSTick)) / (float)1000;

// how many frames did we capture?

float intervalFPS = ((float)_CaptureFPS) / numberOfSeconds;

if (intervalFPS == 0.0)

return;

NotifyUser(CaptureFPS, $"{intervalFPS:F1}", NotifyType.StatusMessage);

// how many frames did we render

intervalFPS = ((float)_RenderFPS) / numberOfSeconds;

if (intervalFPS == 0.0)

return;

NotifyUser(RenderFPS, $"{intervalFPS:F1}", NotifyType.StatusMessage);

}

_CaptureFPS = 0;

_RenderFPS = 0;

_LastFPSTick = fpsTick;

}

public void NotifyUser(string strMessage, NotifyType type)

{

NotifyUser(StatusBlock, strMessage, type);

}

/// <summary>

/// Display a message to the user.

/// This method may be called from any thread.

/// </summary>

/// <param name="strMessage"></param>

/// <param name="type"></param>

public void NotifyUser(TextBlock block, string strMessage, NotifyType type)

{

// If called from the UI thread, then update immediately.

// Otherwise, schedule a task on the UI thread to perform the update.

if (Dispatcher.HasThreadAccess)

{

UpdateStatus(block, strMessage, type);

}

else

{

var task = Dispatcher.RunAsync(CoreDispatcherPriority.Normal, () => UpdateStatus(block, strMessage, type));

task.AsTask().Wait();

}

}

/// <summary>

/// Update the status message displayed on the UI

/// </summary>

/// <param name="strMessage"></param>

/// <param name="type"></param>

private void UpdateStatus(TextBlock block, string strMessage, NotifyType type)

{

switch (type)

{

case NotifyType.StatusMessage:

UIStatusBorder.Background = new SolidColorBrush(Windows.UI.Colors.Green);

break;

case NotifyType.ErrorMessage:

UIStatusBorder.Background = new SolidColorBrush(Windows.UI.Colors.Red);

break;

}

block.Text = strMessage;

// Collapse the status bar if there is no text, to conserve screen space.

if (block.Text != String.Empty)

{

UIStatusBorder.Visibility = Visibility.Visible;

UIStatusPanel.Visibility = Visibility.Visible;

}

else

{

UIStatusBorder.Visibility = Visibility.Collapsed;

UIStatusPanel.Visibility = Visibility.Collapsed;

}

}

/// <summary>

/// Acquire manually an image from the camera preview stream

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UIButtonAcquireImage_Click(object sender, RoutedEventArgs e)

{

Debug.WriteLine("UIButtonAcquireImage_Click");

_evaluationLock.Wait();

{

_proceedWithEval = false;

await CleanupCameraAsync();

CleanupInk();

}

_evaluationLock.Release();

UIInputImage.Visibility = Visibility.Visible;

_showInitialImageAndProgress = true;

UIImageControls.IsEnabled = false;

UIModelControls.IsEnabled = false;

CameraCaptureUI dialog = new CameraCaptureUI();

dialog.PhotoSettings.AllowCropping = false;

dialog.PhotoSettings.Format = CameraCaptureUIPhotoFormat.Png;

StorageFile file = await dialog.CaptureFileAsync(CameraCaptureUIMode.Photo);

if (file != null)

{

var vf = await ImageHelper.LoadVideoFrameFromStorageFileAsync(file);

await Task.Run(() =>

{

_proceedWithEval = true;

EvaluateVideoFrameAsync(vf).ConfigureAwait(false).GetAwaiter().GetResult();

});

}

UIImageControls.IsEnabled = true;

UIModelControls.IsEnabled = true;

}

/// <summary>

/// Select and evaluate a picture

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UIButtonFilePick_Click(object sender, RoutedEventArgs e)

{

Debug.WriteLine("UIButtonFilePick_Click");

_evaluationLock.Wait();

{

_proceedWithEval = false;

await CleanupCameraAsync();

CleanupInk();

}

_evaluationLock.Release();

UIInputImage.Visibility = Visibility.Visible;

_showInitialImageAndProgress = true;

_isrocessingImages = true;

UIImageControls.IsEnabled = false;

UIModelControls.IsEnabled = false;

try

{

VideoFrame inputFrame = null;

// use a default image

if (sender == null && e == null)

{

if (_inputFrame == null)

{

var file = await StorageFile.GetFileFromApplicationUriAsync(new Uri($"ms-appx:///Assets/{_kDefaultImageFileName}"));

inputFrame = await ImageHelper.LoadVideoFrameFromStorageFileAsync(file);

}

else

{

inputFrame = _inputFrame;

}

}

else

{

// Load image to VideoFrame

inputFrame = await ImageHelper.LoadVideoFrameFromFilePickedAsync();

}

if (inputFrame == null)

{

NotifyUser("no valid image file selected", NotifyType.ErrorMessage);

}

else

{

await Task.Run(() =>

{

_proceedWithEval = true;

EvaluateVideoFrameAsync(inputFrame).ConfigureAwait(false).GetAwaiter().GetResult();

});

}

}

catch (Exception ex)

{

Debug.WriteLine($"error: {ex.Message}");

NotifyUser(ex.Message, NotifyType.ErrorMessage);

}

UIImageControls.IsEnabled = true;

UIModelControls.IsEnabled = true;

}

/// <summary>

/// 1) Bind input and output features

/// 2) Run evaluation of the model

/// 3) Report the result

/// </summary>

/// <param name="inputVideoFrame"></param>

/// <returns></returns>

private async Task EvaluateVideoFrameAsync(VideoFrame inputVideoFrame)

{

Debug.WriteLine("EvaluateVideoFrameAsync");

bool isReadyForEval = false;

bool showInitialImageAndProgress = true;

bool proceedWithEval = false;

_evaluationLock.Wait();

{

isReadyForEval = _isReadyForEval;

_isReadyForEval = false;

showInitialImageAndProgress = _showInitialImageAndProgress;

proceedWithEval = _proceedWithEval;

}

_evaluationLock.Release();

if ((inputVideoFrame != null) &&

(inputVideoFrame.SoftwareBitmap != null || inputVideoFrame.Direct3DSurface != null) &&

isReadyForEval &&

proceedWithEval)

{

try

{

_perfStopwatch.Restart();

NotifyUser("Processing...", NotifyType.StatusMessage);

await Dispatcher.RunAsync(CoreDispatcherPriority.Normal, async() =>

{

if (showInitialImageAndProgress)

{

UIProcessingProgressRing.IsActive = true;

UIProcessingProgressRing.Visibility = Visibility.Visible;

UIButtonSaveImage.IsEnabled = false;

}

// Crop the input image to communicate appropriately to the user what is being evaluated

_inputFrame = await ImageHelper.CenterCropImageAsync(inputVideoFrame, NeuralStyleTransformerConst.IMAGE_WIDTH, NeuralStyleTransformerConst.IMAGE_HEIGHT);

_perfStopwatch.Stop();

Int64 cropTime = _perfStopwatch.ElapsedMilliseconds;

Debug.WriteLine($"Image handling: {cropTime}ms");

// Bind and Eval

if (_inputFrame != null)

{

_evaluationLock.Wait();

try

{

_perfStopwatch.Restart();

await m_binding.SetInputImageAsync(_inputFrame);

Int64 bindTime = _perfStopwatch.ElapsedMilliseconds;

Debug.WriteLine($"Binding: {bindTime}ms");

// render the input frame

if (showInitialImageAndProgress)

{

await ImageHelper.RenderFrameAsync(_inputFrameRenderer, _inputFrame);

}

// Process the frame with the model

_perfStopwatch.Restart();

await m_skill.EvaluateAsync(m_binding);

_perfStopwatch.Stop();

Int64 evalTime = _perfStopwatch.ElapsedMilliseconds;

Debug.WriteLine($"Eval: {evalTime}ms");

_outputFrame = (m_binding[NeuralStyleTransformerConst.SKILL_OUTPUTNAME_IMAGE].FeatureValue as SkillFeatureImageValue).VideoFrame;

UISkillOutputDetails.Text = "";

await ImageHelper.RenderFrameAsync(_resultframeRenderer, _outputFrame);

}

catch (Exception ex)

{

NotifyUser(ex.Message, NotifyType.ErrorMessage);

Debug.WriteLine(ex.ToString());

}

finally

{

_evaluationLock.Release();

}

if (showInitialImageAndProgress)

{

UIProcessingProgressRing.IsActive = false;

UIProcessingProgressRing.Visibility = Visibility.Collapsed;

UIButtonSaveImage.IsEnabled = true;

}

NotifyUser("Done!", NotifyType.StatusMessage);

}

else

{

Debug.WriteLine("Skipped eval, null input frame");

}

});

}

catch (Exception ex)

{

NotifyUser(ex.Message, NotifyType.ErrorMessage);

Debug.WriteLine(ex.ToString());

}

_evaluationLock.Wait();

{

_isReadyForEval = true;

}

_evaluationLock.Release();

_perfStopwatch.Reset();

}

}

/// <summary>

/// Change style to apply to the input frame

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UIStyleList_SelectionChanged(object sender, SelectionChangedEventArgs e)

{

Debug.WriteLine("UIStyleList_SelectionChanged");

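// Nothing to do until a style and an execution device are both selected

if (UIStyleList.SelectedIndex < 0 || UISkillExecutionDevices.SelectedIndex < 0 || m_skillDescriptor == null)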

{

return;

}

var selection = UIStyleList.SelectedIndex;

UIModelControls.IsEnabled = false;

UIImageControls.IsEnabled = false;

m_skillDescriptor.SetStyle((StyleChoices)selection);

// Initialize skill with the selected supported device

var sel = m_availableExecutionDevices[UISkillExecutionDevices.SelectedIndex];

m_skill = await m_skillDescriptor.CreateSkillAsync(sel) as NeuralStyleTransformerSkill;

// Instantiate a binding object that will hold the skill's input and output resource

m_binding = await m_skill.CreateSkillBindingAsync() as NeuralStyleTransformerBinding;

await Dispatcher.RunAsync(CoreDispatcherPriority.Normal, () =>

{

NotifyUser($"Ready to stylize! ", NotifyType.StatusMessage);

UIImageControls.IsEnabled = true;

UIModelControls.IsEnabled = true;

if (_isrocessingImages)

{

UIButtonFilePick_Click(null, null);

}

});

}

/// <summary>

/// Save image result to file

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UIButtonSaveImage_Click(object sender, RoutedEventArgs e)

{

Debug.WriteLine("UIButtonSaveImage_Click");

await ImageHelper.SaveVideoFrameToFilePickedAsync(_outputFrame);

}

/// <summary>

/// Apply the effect to hand-drawn ink

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UIButtonInking_Click(object sender, RoutedEventArgs e)

{

Debug.WriteLine("UIButtonInking_Click");

_evaluationLock.Wait();

{

_proceedWithEval = false;

await CleanupCameraAsync();

CleanupInk();

CleanupInputImage();

}

_evaluationLock.Release();

UIInkControls.Visibility = Visibility.Visible;

UIResultImage.Width = UIInkControls.Width;

UIResultImage.Height = UIInkControls.Height;

_showInitialImageAndProgress = false;

UIImageControls.IsEnabled = false;

UIModelControls.IsEnabled = false;

inkEvaluationDispatcherTimer = new DispatcherTimer();

inkEvaluationDispatcherTimer.Tick += async (object a, object b) =>

{

if (!_frameAquisitionLock.Wait(100))

{

return;

}

{

if (_isProcessingFrames)

{

_frameAquisitionLock.Release();

return;

}

_isProcessingFrames = true;

}

_frameAquisitionLock.Release();

try

{

if (UIInkControls.Visibility != Visibility.Visible)

{

throw (new Exception("invisible control, will not attempt rendering"));

}

// Render the ink control to an image

RenderTargetBitmap renderBitmap = new RenderTargetBitmap();

await renderBitmap.RenderAsync(UIInkGrid);

var buffer = await renderBitmap.GetPixelsAsync();

var softwareBitmap = SoftwareBitmap.CreateCopyFromBuffer(buffer, BitmapPixelFormat.Bgra8, renderBitmap.PixelWidth, renderBitmap.PixelHeight, BitmapAlphaMode.Ignore);

buffer = null;

renderBitmap = null;

// Instantiate VideoFrame using the softwareBitmap of the ink

VideoFrame vf = VideoFrame.CreateWithSoftwareBitmap(softwareBitmap);

// Evaluate the VideoFrame

await Task.Run(() =>

{

EvaluateVideoFrameAsync(vf).ConfigureAwait(false).GetAwaiter().GetResult();

_frameAquisitionLock.Wait();

{

_isProcessingFrames = false;

}

_frameAquisitionLock.Release();

});

}

catch (Exception ex)

{

Debug.WriteLine(ex.Message);

}

};

inkEvaluationDispatcherTimer.Interval = new TimeSpan(0, 0, 0, 0, 33);

inkEvaluationDispatcherTimer.Start();

_evaluationLock.Wait();

{

_proceedWithEval = true;

}

_evaluationLock.Release();

UIImageControls.IsEnabled = true;

UIModelControls.IsEnabled = true;

}

/// <summary>

/// Cleanup inking resources

/// </summary>

private void CleanupInk()

{

Debug.WriteLine("CleanupInk");

_frameAquisitionLock.Wait();

try

{

if (inkEvaluationDispatcherTimer != null)

{

inkEvaluationDispatcherTimer.Stop();

inkEvaluationDispatcherTimer = null;

}

UIInkCanvasInput.InkPresenter.StrokeContainer.Clear();

UIInkControls.Visibility = Visibility.Collapsed;

_showInitialImageAndProgress = true;

_isProcessingFrames = false;

}

catch (Exception ex)

{

Debug.WriteLine($"CleanupInk: {ex.Message}");

}

finally

{

_frameAquisitionLock.Release();

}

}

/// <summary>

/// Cleanup input image resources

/// </summary>

private void CleanupInputImage()

{

Debug.WriteLine("CleanupInputImage");

_frameAquisitionLock.Wait();

try

{

UIInputImage.Visibility = Visibility.Collapsed;

_isrocessingImages = false;

}

finally

{

_frameAquisitionLock.Release();

}

}

/// <summary>

/// Apply effect in real time to a camera feed

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UIButtonLiveStream_Click(object sender, RoutedEventArgs e)

{

Debug.WriteLine("UIButtonLiveStream_Click");

_evaluationLock.Wait();

{

_proceedWithEval = false;

await CleanupCameraAsync();

CleanupInk();

CleanupInputImage();

}

_evaluationLock.Release();

await InitializeMediaCaptureAsync();

}

/// <summary>

/// Initialize MediaCapture for live stream scenario

/// </summary>

/// <returns></returns>

private async Task InitializeMediaCaptureAsync()

{

Debug.WriteLine("InitializeMediaCaptureAsync");

_frameAquisitionLock.Wait();

try

{

// Find the sources

var allGroups = await MediaFrameSourceGroup.FindAllAsync();

_mediaFrameSourceGroupList = allGroups.Where(group => group.SourceInfos.Any(sourceInfo => sourceInfo.SourceKind == MediaFrameSourceKind.Color

&& (sourceInfo.MediaStreamType == MediaStreamType.VideoPreview

|| sourceInfo.MediaStreamType == MediaStreamType.VideoRecord))).ToList();

}

catch (Exception ex)

{

Debug.WriteLine(ex.Message);

NotifyUser(ex.Message, NotifyType.ErrorMessage);

_mediaFrameSourceGroupList = null;

}

finally

{

_frameAquisitionLock.Release();

}

if ((_mediaFrameSourceGroupList == null) || (_mediaFrameSourceGroupList.Count == 0))

{

// No camera sources found

Debug.WriteLine("No Camera found");

NotifyUser("No Camera found", NotifyType.ErrorMessage);

return;

}

var cameraNamesList = _mediaFrameSourceGroupList.Select(group => group.DisplayName);

UICmbCamera.ItemsSource = cameraNamesList;

UICmbCamera.SelectedIndex = 0;

}

/// <summary>

/// Start previewing from the camera

/// </summary>

private void StartPreview()

{

Debug.WriteLine("StartPreview");

_selectedMediaFrameSource = _mediaCapture.FrameSources.FirstOrDefault(source => source.Value.Info.MediaStreamType == MediaStreamType.VideoPreview

&& source.Value.Info.SourceKind == MediaFrameSourceKind.Color).Value;

if (_selectedMediaFrameSource == null)

{

_selectedMediaFrameSource = _mediaCapture.FrameSources.FirstOrDefault(source => source.Value.Info.MediaStreamType == MediaStreamType.VideoRecord

&& source.Value.Info.SourceKind == MediaFrameSourceKind.Color).Value;

}

// If no preview stream is available, bail out

if (_selectedMediaFrameSource == null)

{

return;

}

_mediaPlayer = new MediaPlayer();

_mediaPlayer.RealTimePlayback = true;

_mediaPlayer.AutoPlay = true;

_mediaPlayer.Source = MediaSource.CreateFromMediaFrameSource(_selectedMediaFrameSource);

UIMediaPlayerElement.SetMediaPlayer(_mediaPlayer);

UITxtBlockPreviewProperties.Text = string.Format("{0}x{1}@{2}, {3}",

_selectedMediaFrameSource.CurrentFormat.VideoFormat.Width,

_selectedMediaFrameSource.CurrentFormat.VideoFormat.Height,

_selectedMediaFrameSource.CurrentFormat.FrameRate.Numerator + "/" + _selectedMediaFrameSource.CurrentFormat.FrameRate.Denominator,

_selectedMediaFrameSource.CurrentFormat.Subtype);

UICameraSelectionControls.Visibility = Visibility.Visible;

UIMediaPlayerElement.Visibility = Visibility.Visible;

UIResultImage.Width = UIMediaPlayerElement.Width;

UIResultImage.Height = UIMediaPlayerElement.Height;

}

/// <summary>

/// A new frame from the camera is available

/// </summary>

/// <param name="sender"></param>

/// <param name="args"></param>

private void _modelInputFrameReader_FrameArrived(MediaFrameReader sender, MediaFrameArrivedEventArgs args)

{

Debug.WriteLine("_modelInputFrameReader_FrameArrived");

MediaFrameReference frame = null;

if (_isProcessingFrames)

{

return;

}

// Do not attempt processing of more than 1 frame at a time

_frameAquisitionLock.Wait();

{

_isProcessingFrames = true;

_CaptureFPS += 1;

try

{

frame = sender.TryAcquireLatestFrame();

}

catch (Exception ex)

{

Debug.WriteLine(ex.Message);

NotifyUser(ex.Message, NotifyType.ErrorMessage);

frame = null;

}

if ((frame != null) && (frame.VideoMediaFrame != null))

{

VideoFrame vf = null;

// Receive frames from the camera and transfer to system memory

_perfStopwatch.Restart();

SoftwareBitmap softwareBitmap = frame.VideoMediaFrame.SoftwareBitmap;

if (softwareBitmap == null) // frames are coming as Direct3DSurface

{

Debug.Assert(frame.VideoMediaFrame.Direct3DSurface != null);

vf = VideoFrame.CreateWithDirect3D11Surface(frame.VideoMediaFrame.Direct3DSurface);

}

else

{

vf = VideoFrame.CreateWithSoftwareBitmap(softwareBitmap);

}

EvaluateVideoFrameAsync(vf).ConfigureAwait(false).GetAwaiter().GetResult();

}

Thread.Sleep(500);

_isProcessingFrames = false;

}

_frameAquisitionLock.Release();

}

/// <summary>

/// On selected camera changed

/// </summary>

/// <param name="sender"></param>

/// <param name="e"></param>

private async void UICmbCamera_SelectionChanged(object sender, SelectionChangedEventArgs e)

{

Debug.WriteLine("UICmbCamera_SelectionChanged");

if ((_mediaFrameSourceGroupList.Count == 0) || (UICmbCamera.SelectedIndex < 0))

{

return;

}

_evaluationLock.Wait();

{

_proceedWithEval = false;

await CleanupCameraAsync();

CleanupInk();

CleanupInputImage();

}

_evaluationLock.Release();

UIImageControls.IsEnabled = false;

UIModelControls.IsEnabled = false;

_frameAquisitionLock.Wait();

try

{

_selectedMediaFrameSourceGroup = _mediaFrameSourceGroupList[UICmbCamera.SelectedIndex];

// Create MediaCapture and its settings

_mediaCapture = new MediaCapture();

var settings = new MediaCaptureInitializationSettings

{

SourceGroup = _selectedMediaFrameSourceGroup,

PhotoCaptureSource = PhotoCaptureSource.Auto,

MemoryPreference = MediaCaptureMemoryPreference.Cpu,

StreamingCaptureMode = StreamingCaptureMode.Video

};

// Initialize MediaCapture

await _mediaCapture.InitializeAsync(settings);

StartPreview();

if (m_skillDescriptor != null)

{

await InitializeModelInputFrameReaderAsync();

}

_evaluationLock.Wait();

{

_proceedWithEval = true;

}

_evaluationLock.Release();

}

catch (Exception ex)

{

NotifyUser(ex.Message, NotifyType.ErrorMessage);

Debug.WriteLine(ex.ToString());

}

finally

{

_frameAquisitionLock.Release();

}

UIImageControls.IsEnabled = true;

UIModelControls.IsEnabled = true;

}

/// <summary>

/// Initialize the camera frame reader and preview UI element

/// </summary>

/// <returns></returns>

private async Task InitializeModelInputFrameReaderAsync()

{

Debug.WriteLine("InitializeModelInputFrameReaderAsync");

// Create the MediaFrameReader

try

{

if (_modelInputFrameReader != null)

{

await _modelInputFrameReader.StopAsync();

_modelInputFrameReader.FrameArrived -= _modelInputFrameReader_FrameArrived;

}

string frameReaderSubtype = _selectedMediaFrameSource.CurrentFormat.Subtype;

if (string.Compare(frameReaderSubtype, MediaEncodingSubtypes.Nv12, true) != 0 &&

string.Compare(frameReaderSubtype, MediaEncodingSubtypes.Bgra8, true) != 0 &&

string.Compare(frameReaderSubtype, MediaEncodingSubtypes.Yuy2, true) != 0 &&

string.Compare(frameReaderSubtype, MediaEncodingSubtypes.Rgb32, true) != 0)

{

frameReaderSubtype = MediaEncodingSubtypes.Bgra8;

}

_modelInputFrameReader = null;

_modelInputFrameReader = await _mediaCapture.CreateFrameReaderAsync(_selectedMediaFrameSource, frameReaderSubtype);

_modelInputFrameReader.AcquisitionMode = MediaFrameReaderAcquisitionMode.Realtime;

_isProcessingFrames = false;

await _modelInputFrameReader.StartAsync();

_modelInputFrameReader.FrameArrived += _modelInputFrameReader_FrameArrived;

_showInitialImageAndProgress = false;

}

catch (Exception ex)

{

NotifyUser($"Error while initializing MediaframeReader: " + ex.Message, NotifyType.ErrorMessage);

Debug.WriteLine(ex.ToString());

}

}

/// <summary>

/// Cleanup camera used for live stream scenario

/// </summary>

private async Task CleanupCameraAsync()

{

Debug.WriteLine("CleanupCameraAsync");

await _frameAquisitionLock.WaitAsync();

try

{

_showInitialImageAndProgress = true;

_isProcessingFrames = false;

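if (_modelInputFrameReader != null)

{

_modelInputFrameReader.FrameArrived -= _modelInputFrameReader_FrameArrived;

await _modelInputFrameReader.StopAsync();

_modelInputFrameReader.Dispose();

}

_modelInputFrameReader = null;

if (_mediaPlayer != null)

{

_mediaPlayer.Source = null;

_mediaPlayer = null;

}

if (_mediaCapture != null)

{

// Release the camera so it can be initialized again later

_mediaCapture.Dispose();

_mediaCapture = null;

}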

}

catch (Exception ex)

{

Debug.WriteLine($"CleanupCameraAsync: {ex.Message}");

}

finally

{

_frameAquisitionLock.Release();

}

await Dispatcher.RunAsync(CoreDispatcherPriority.Normal, () =>

{

UICameraSelectionControls.Visibility = Visibility.Collapsed;

UIMediaPlayerElement.Visibility = Visibility.Collapsed;

});

}

private void UISkillExecutionDevices_SelectionChanged(object sender, SelectionChangedEventArgs e)

{

Debug.WriteLine("UISkillExecutionDevices_SelectionChanged");

if (UIStyleList == null)

{

return;

}

UIStyleList_SelectionChanged(null, null);

}

}

public enum NotifyType

{

StatusMessage,

ErrorMessage

};

}
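The pixel-format check in InitializeModelInputFrameReaderAsync is easier to reason about, and to test without a camera, when it is pulled out as a pure function. The sketch below is a hypothetical helper, not part of the sample. It assumes the `MediaEncodingSubtypes.Nv12`, `Bgra8`, `Yuy2` and `Rgb32` properties return the strings "NV12", "BGRA8", "YUY2" and "RGB32", and it compares them case-insensitively, as the original `string.Compare(..., true)` chain does.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the original sample: mirrors the subtype check
// in InitializeModelInputFrameReaderAsync so it can run without a camera.
public static class FrameFormatHelper
{
    // Assumed to match the values of MediaEncodingSubtypes.Nv12, Bgra8, Yuy2 and Rgb32.
    private static readonly HashSet<string> SupportedSubtypes =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "NV12", "BGRA8", "YUY2", "RGB32" };

    private const string Fallback = "BGRA8";

    // Keeps the camera's current subtype if it is in the supported set,
    // otherwise falls back to BGRA8 (same rule as the original if-chain).
    public static string ResolveFrameReaderSubtype(string currentSubtype)
    {
        if (currentSubtype != null && SupportedSubtypes.Contains(currentSubtype))
        {
            return currentSubtype;
        }
        return Fallback;
    }

    public static void Main()
    {
        foreach (var subtype in new[] { "nv12", "MJPG", "RGB32", null })
        {
            Console.WriteLine($"{subtype ?? "(null)"} -> {ResolveFrameReaderSubtype(subtype)}");
        }
    }
}
```

A set lookup replaces the four chained comparisons, so adding another accepted format later means adding one string rather than another `&&` clause.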

Of the long listing above, three methods deserve attention. When the app opens, OnNavigatedTo runs; it shows how to read the descriptor of our skill library. Next, setting the Style list to its first entry as the default triggers UIStyleList_SelectionChanged, where we instantiate the skill and its binding based on the selected style and execution device (CPU or GPU). Changing the device goes through the same path, because UISkillExecutionDevices_SelectionChanged simply calls UIStyleList_SelectionChanged again. Finally, EvaluateVideoFrameAsync sets the input, binds the output, and then evaluates the model (runs inference). So whatever the input source (camera, ink canvas, media player, or an image from the file picker), we first extract it into a single VideoFrame and only then process it.
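To make that order of operations concrete, here is a minimal, self-contained sketch of the same pipeline. `Frame`, `StyleBinding`, `IStyleSkill` and `InvertStyleSkill` are hypothetical stand-ins for VideoFrame, the skill binding and the skill; they are not the Microsoft.AI.Skills.SkillInterface API. The byte-inverting "style" only takes the place of the ONNX model inference so the example can run anywhere.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for a VideoFrame: just a buffer of pixel bytes.
public sealed class Frame
{
    public byte[] Pixels { get; }
    public Frame(byte[] pixels) => Pixels = pixels;
}

// Hypothetical stand-in for the skill binding: one input image, one output image.
public sealed class StyleBinding
{
    public Frame Input { get; private set; }
    public Frame Output { get; private set; }
    public void SetInputImage(Frame frame) => Input = frame;
    public void BindOutputImage(Frame frame) => Output = frame;
}

public interface IStyleSkill
{
    Task EvaluateAsync(StyleBinding binding);
}

// Toy "style" that writes the inverse of each input byte into the bound output.
// A real skill would run the ONNX model through WinML instead.
public sealed class InvertStyleSkill : IStyleSkill
{
    public Task EvaluateAsync(StyleBinding binding)
    {
        byte[] src = binding.Input.Pixels;
        byte[] dst = binding.Output.Pixels;
        if (src.Length != dst.Length)
            throw new ArgumentException("Output must be bound with the same size as the input.");
        for (int i = 0; i < src.Length; i++)
            dst[i] = (byte)(255 - src[i]);
        return Task.CompletedTask;
    }
}

public static class Pipeline
{
    // Same order as described for EvaluateVideoFrameAsync: set input, bind output, evaluate.
    public static async Task<Frame> EvaluateFrameAsync(IStyleSkill skill, StyleBinding binding, Frame frame)
    {
        binding.SetInputImage(frame);
        binding.BindOutputImage(new Frame(new byte[frame.Pixels.Length]));
        await skill.EvaluateAsync(binding);
        return binding.Output;
    }

    public static async Task Main()
    {
        // Whatever the source (camera, ink canvas, file picker), it is reduced to one Frame first.
        var frame = new Frame(new byte[] { 0, 128, 255 });
        Frame result = await EvaluateFrameAsync(new InvertStyleSkill(), new StyleBinding(), frame);
        Console.WriteLine(string.Join(",", result.Pixels));
    }
}
```

Running `Main` prints `255,127,0`. Because every source is first converted into the same frame type, the evaluation method only has to be written once.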

Don't forget to open Package.appxmanifest and, on the "Capabilities" tab, check Pictures Library and Webcam so the app is allowed to access the camera and the Pictures folder.

You can download the complete source code here.

I hope this is useful. This article also wraps up our series on VisionSkills. See you in the next series.

Cheers, Makers ;D
